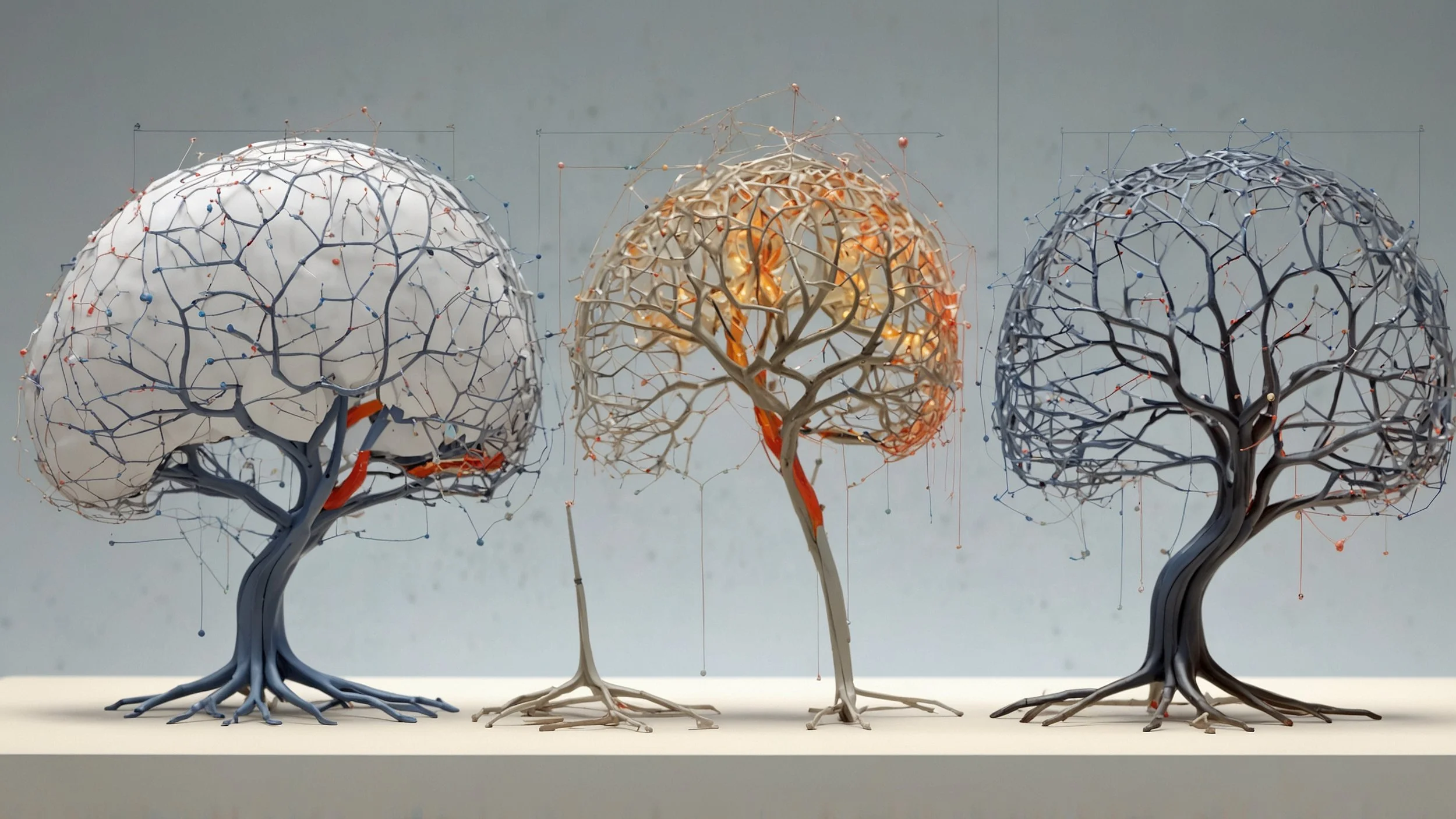

Explainable artificial intelligence: key to transparency and trust

As artificial intelligence (AI) advances, it plays an increasingly essential role in areas such as healthcare, finance, and computer security. However, the "black box" problem has raised ethical concerns. This problem is the difficulty of understanding how these systems reach their decisions. Explainable Artificial Intelligence (XAI) addresses it by making these technologies more accessible and understandable, which helps to ensure their ethical and responsible use.

The need for transparency in artificial intelligence

Explainable Artificial Intelligence attempts to address the inherent lack of transparency in complex AI systems. It gives users the ability not only to observe, but also to understand, the reasons behind the decisions produced by machine learning algorithms. In critical areas such as healthcare and finance, this transparency allows experts to trust the recommendations and results they obtain. It strengthens the relationship between humans and machines and supports fair, evidence-based decisions.

Techniques for achieving explainability in artificial intelligence

In artificial intelligence and machine learning, model explainability is crucial for building confidence in a model's decisions and for understanding how they are reached. There are two fundamental strategies for achieving it:

Intrinsic approach: Uses models that are interpretable by design. Examples include decision trees, which present clear, easy-to-follow decision paths, and linear regression, where the relationships between variables are explicit.

Post-hoc methods: Applied to complex models after they have been trained. They use tools such as feature relevance analysis, which assesses which features most influence the model's decisions.

Counterfactual explanations: A post-hoc technique that explains the model by answering "what if" questions. It shows how specific changes to the input features would alter the system's decision.

Importance of explainability: Explainability builds user confidence. It lets developers and stakeholders understand why a model makes one decision rather than another.

Practical applications: These techniques are useful in critical sectors such as healthcare and finance. In these sectors, they help to determine the impact of specific parameters and support fair, transparent decisions.
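The three techniques described above can be illustrated with a minimal Python sketch that uses only the standard library. The sketch assumes a hypothetical loan-approval model. Its feature names, weights, and bias are made up for illustration.

The sketch shows three kinds of explanation:

* **Intrinsic:** it reads the model's per-feature contributions directly from its linear weights.
* **Post-hoc:** it estimates permutation feature importance, which is one common form of feature relevance analysis.
* **Counterfactual:** it searches for the smallest change to a single feature that flips a decision.

This is a teaching sketch, not a production implementation. For real models, a tested routine such as scikit-learn's `sklearn.inspection.permutation_importance` would be the more reliable choice.

```python
import random
from typing import Callable, Dict, List, Optional

Row = Dict[str, float]

# Hypothetical loan-approval model: feature names, weights, and bias are
# illustrative assumptions, not taken from any real system.
WEIGHTS: Row = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -0.2
FEATURES: List[str] = list(WEIGHTS)


def score(x: Row) -> float:
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[f] * x[f] for f in FEATURES)


def predict(x: Row) -> int:
    """Approve (1) if the score is positive, otherwise reject (0)."""
    return 1 if score(x) > 0 else 0


def contributions(x: Row) -> Row:
    """Intrinsic explanation: each feature's additive share of the score."""
    return {f: WEIGHTS[f] * x[f] for f in FEATURES}


def permutation_importance(model: Callable[[Row], int], data: List[Row],
                           labels: List[int], seed: int = 0) -> Row:
    """Post-hoc explanation: accuracy lost when one feature is shuffled.

    It only calls `model`, so the model is treated as a black box.
    """
    rng = random.Random(seed)

    def accuracy(rows: List[Row]) -> float:
        return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

    baseline = accuracy(data)
    importances: Row = {}
    for f in FEATURES:
        values = [r[f] for r in data]
        rng.shuffle(values)  # break the link between feature f and the label
        permuted = [{**r, f: v} for r, v in zip(data, values)]
        importances[f] = baseline - accuracy(permuted)
    return importances


def counterfactual(x: Row, feature: str, step: float = 0.1,
                   max_steps: int = 1000) -> Optional[Row]:
    """Counterfactual: the smallest change to one feature (in multiples of
    `step`, trying both directions) that flips the decision, or None."""
    original = predict(x)
    for k in range(1, max_steps + 1):
        for direction in (1, -1):
            candidate = {**x, feature: x[feature] + direction * k * step}
            if predict(candidate) != original:
                return candidate
    return None


if __name__ == "__main__":
    applicant = {"income": 2.0, "debt": 2.0, "years_employed": 1.0}
    print("decision:", predict(applicant))
    print("contributions:", contributions(applicant))

    rng = random.Random(42)
    data = [{f: rng.uniform(0, 3) for f in FEATURES} for _ in range(200)]
    # Labels come from the model itself, so the scores measure how much
    # the model relies on each feature.
    labels = [predict(r) for r in data]
    print("importance:", permutation_importance(predict, data, labels))

    print("counterfactual:", counterfactual(applicant, "debt"))
```

For the sample applicant, the model rejects the application. The contributions show that debt pulls the score down most strongly. The counterfactual reports that reducing debt from 2.0 to roughly 1.3 would turn the rejection into an approval.

The single-feature line search is deliberately simple. Practical counterfactual methods usually search over several features at once and add constraints on distance and plausibility.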

The challenges and future of explainable artificial intelligence

Although XAI has great potential, it faces significant challenges:

Trade-off between accuracy and comprehensibility: Highly transparent models tend to be less accurate. This creates a dilemma: how to explain complex decisions in an accessible way without sacrificing accuracy.

Lack of standard metrics: There are no common indicators for measuring how explainable a model is, which makes uniform evaluation difficult. What counts as understandable can vary considerably from one user to another.

Transparency from the beginning: The future of XAI points toward building transparency into the entire development process. This means designing models with users' needs in mind from the outset.

Continuous adaptation: Incorporating ongoing user feedback into the models to adjust both their performance and the explanations they offer. This keeps them aligned with users' needs and observations.

User-centered approach: Developing systems that are not only technically sound, but also accessible and useful to the people who use them. This improves how users interact with the results that AI provides and how they interpret those results.

Explainable artificial intelligence is a fundamental pillar for building a clearer and safer technological future. As AI becomes further embedded in daily life, the ability to understand and ethically evaluate it becomes a societal imperative. XAI not only increases user safety, but also supports more equitable, evidence-based decisions. It paves the way for more conscious and inclusive use of technology.